

State of the Chase Season 2024

- Thread starter adlyons


- Status

- Not open for further replies.

Jason N

EF5

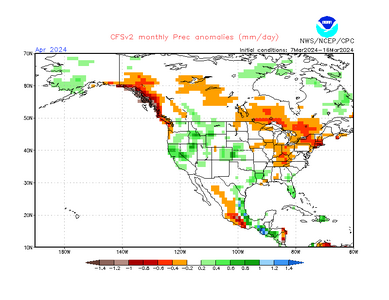

> Still bone dry out west. A lot can change in 30-45 days, but "El Niño" is not cranking it out like expected. Neither the GFS nor the ECMWF shows much in precipitation accumulations over the driest regions for the next 10+ days. Hopefully, we will avoid another "dust" year where visibility is cut throughout the alley.
>
> View attachment 24620

I wonder how this will look over the next few weeks, with the recent cutoff low and the next 2 systems expected to provide some decent snows across AZ/NM. Will you repost this in a couple of weeks? It will be interesting to look back on this image.


Warren Faidley

Supporter


The problem with Pacific configurations this time of year for SW weather is that they generally bring their own RH, which is **usually** not all that impressive by the time it reaches the E-NM and W-TX areas. It really takes the GoM influx in April to bring heavy rains. I'm not too concerned unless the end of April is still bone dry. I've seen dry conditions vanish quickly if there is a good pull of Gulf RH northwestward leading to steady or heavy precipitation.

Mike Smith

EF5

I just finished looking at today's 12Z ECMWF and UKMET and, wow, for this weekend.

The ECMWF has SVR in the warm sector and heavy snow in the cold sector. Surface pressure over Kansas Sunday is down to 982 mb. The ECMWF usually has a northward bias in situations like these.

While this is way too soon to get specific, I believe there is a good chance of SVR this weekend.


Andy Wehrle

EF5


We can delve more into this in Target Area threads as needed, but I'm seeing pretty consistent moisture problems for this weekend/early next week, with both GFS and Euro-AIFS depicting a low spinning up in the Gulf that moves up the East Coast late this week into the weekend, with its associated cold front scouring out the Gulf and leaving only a couple of days for partial recovery ahead of the system early next week.

Moisture issues are obviously not unheard of this time of year, and I've already been surprised multiple times just in the last two years by how low a dewpoint significant tornadoes can occur with (low 50s for Winterset 2022, mid-to-upper 40s at best for Evansville, WI last month). It's just one of those flies in the ointment that will go a long way toward determining the ceiling of the setup.

Mike Smith

EF5

My 2¢...

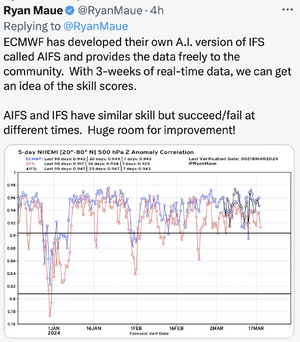

As we move deeper into tornado season 2024, I'm sure everyone wants to make the best forecast possible. So, I'm not certain why so many refer to the GFS. Dr. Ryan Maue posted these today and they show just how awful the GFS is -- at times at 1980s levels (really).

In order, the verification stats are better for the ECMWF, UKMET and Canadian than the GFS. I've gotten to the point where I don't even bother to look at the GFS.

I don't have a feel for the AI-ECMWF but I would probably approach it with caution until we have a couple of months under our belts to get a feel for it. In theory, AI in meteorology has a lot of promise but I think we are probably still in the "theory" time period.

Finally, I wish to quote Dr. Tom Stewart, then of SUNY Albany, who did the first-ever studies of human factors in meteorology. Similar human-factors studies in aviation have revolutionized safety and cockpit order. He found, "meteorologists love more models because they make them feel more confident, but more models do not make them more accurate."



Warren Faidley

Supporter

I generally use one or the other, then compare the two for some type of average guess. There is also non-modeling logic to be applied, e.g., a bone dry GoM takes time to recharge.

gdlewen

EF4

FWIW, I check the GFS while the other publicly available models are out of range. Once a pattern stops flickering in and out of existence, I start paying more attention. For example, on 3/7 an alert popped up on my phone, "Possible Panhandle Chase". I don't recall when I logged that entry, since by 3/7 the event had shifted well east of the Panhandle, but there must have been some run-to-run consistency when I did. Other than that...no.

Jason N

EF5

I've always wanted to see a climatology of HP vs. LP zones across the Plains. I can operationalize my targets to that effect, but I don't know if there has been any long-term analysis on it.

Jason N

EF5

Help me understand this better: at what value does "skill" fall below a threshold of accuracy that creates "distrust"? I read in some forums that below .80 is considered the beginning of distrust due to accuracy issues, but I am not entirely sure about that. What I am wondering, in general terms, is whether there is some kind of logarithmic shift in error when a model falls below .80 or .75. So, if accuracy is being measured mostly by 500mb anomalies and a model's skill is, say, .75 on heights, does that lead to a surface pressure being off by X hPa or mb? I think I understand the concept; I just don't see where I can visualize it on a chart.

To add to this, on the topic of distrust: when we are harping on inaccuracy, what lens are we seeing it through?

- Scientific distrust (very high standard)

- Operational distrust (high standard)

- General-use distrust (medium to low standard)

Some really lengthy answers could probably emerge here, and I'm happy to take that to another feed if needed.
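For anyone who wants to put numbers on the skill question above, here is a minimal sketch, assuming the score under discussion is the 500mb anomaly correlation coefficient (ACC). The function name `anomaly_correlation`, the flat 5600 m climatology, and the random "forecast errors" are all made up for illustration; real verification uses actual analyses and latitude weighting, which this leaves out. It only relates ACC to height error, not to surface pressure error.

```python
import numpy as np


def anomaly_correlation(forecast, observed, climatology):
    """Centered anomaly correlation between a forecast and the verifying analysis.

    All three inputs are arrays of 500mb heights on the same grid. Latitude
    weighting, which operational verification applies, is omitted here.
    """
    f_anom = np.asarray(forecast, dtype=float) - np.asarray(climatology, dtype=float)
    o_anom = np.asarray(observed, dtype=float) - np.asarray(climatology, dtype=float)
    f_anom = f_anom - f_anom.mean()
    o_anom = o_anom - o_anom.mean()
    return float((f_anom * o_anom).sum() / np.sqrt((f_anom**2).sum() * (o_anom**2).sum()))


# Synthetic (made-up) data: a flat 5600 m climatology plus random anomalies.
rng = np.random.default_rng(42)
climatology = np.full((20, 40), 5600.0)
observed = climatology + rng.normal(0.0, 60.0, size=climatology.shape)

# A "forecast" is the observed field plus random error of a chosen size (meters).
for error_sd in (0.0, 30.0, 60.0):
    forecast = observed + rng.normal(0.0, error_sd, size=observed.shape)
    acc = anomaly_correlation(forecast, observed, climatology)
    rmse = float(np.sqrt(((forecast - observed) ** 2).mean()))
    print(f"error sd {error_sd:5.1f} m -> ACC {acc:.2f}, RMSE {rmse:.1f} m")
```

Plotting ACC against RMSE for a range of error sizes is one way to get the relationship onto a chart. In this toy setup the ACC falls off smoothly as the error grows, with no sudden break at .80; whether real models behave that way is a separate question.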

Mike Smith

EF5

Jason,

Because I am in the middle of some other projects, a couple of quick thoughts:


- 500mb is an "easier" forecast than surface features. So, a poor 500mb forecast will likely lead to a very poor surface forecast. Thus, my mentioning of this in the context of storm chasing. When the 500mb forecast is in the .70s you can pretty well count on a lousy surface forecast.

- In "ancient times" (intern dinosaurs brought the model output to us) in numerical weather forecasting, we had two models: the barotropic (always too far to the left) and baroclinic (usually too far to the right). They went out just 36 hours. If you averaged the two, you usually had a pretty good 500mb forecast. But, because they were new, in the early 1970s, we had to figure this out. I'm making this point because, as with CAMS about ten years ago, in 2024 we are entering a new period of complexity with trying to figure out AI-powered tools, especially since -- as last week demonstrates -- we haven't completely figured out the CAMS.

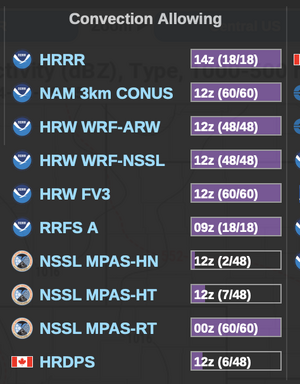

- Speaking of CAMS, when Pivotal Weather started, there were three convection-allowing models. Now, there are ten. I am highly skeptical that more CAMS will lead to better forecasts.

- Added this thought: I, of course, look at the SPC convective outlooks and NADOCAST. I find the latter to be surprisingly good.
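The point above about averaging the barotropic and baroclinic models can be illustrated with a toy example. This is only a sketch with invented numbers: the 150 km and 120 km biases, the 80 km random error, and the 200 cases are hypothetical and are not taken from any real verification record.

```python
import numpy as np

rng = np.random.default_rng(0)
n_cases = 200

# Hypothetical truth: trough position (km to the right of a reference point).
truth = rng.normal(0.0, 300.0, n_cases)

# Hypothetical models with opposite systematic biases plus random error.
model_left = truth - 150.0 + rng.normal(0.0, 80.0, n_cases)   # too far left
model_right = truth + 120.0 + rng.normal(0.0, 80.0, n_cases)  # too far right
blend = 0.5 * (model_left + model_right)                      # simple average


def rmse(forecast, verification):
    """Root-mean-square error of a forecast against the verifying positions."""
    return float(np.sqrt(np.mean((forecast - verification) ** 2)))


rmse_left = rmse(model_left, truth)
rmse_right = rmse(model_right, truth)
rmse_blend = rmse(blend, truth)

print(f"left-biased model:  {rmse_left:.0f} km")
print(f"right-biased model: {rmse_right:.0f} km")
print(f"average of the two: {rmse_blend:.0f} km")
```

The blend only helps this much because the two biases point in opposite directions. Averaging two models that err the same way would not cancel anything.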

I was corresponding yesterday with a university PhD in meteorology who teaches synoptics and we both agree that too many meteorologists these days are making the art and science of forecasting and storm warnings too complex.


Jason N

EF5

Completely agree with your observations. I was looking recently at the WoF's, and it's certainly interesting that you brought up such a quick increase in CAMS over even the past 5 years. From my vantage of 25 years' experience in operational forecasting, and having VIV'd plenty of models, this aspect of confidence and skill made me think about the ways in which we narrate confidence and prediction over time scales, moving from CAMS to deterministic models, to short- and long-range ensembles, to SubX, to straight climo.

Also agree that, while there is so much to emulate in the atmosphere through models and simulation, generic pattern recognition is so valuable and kind of lost on those who are looking for those hyper-exact details.

Models: garbage in, garbage out.


Mike Smith

EF5

> Generic pattern recognition is so valuable

Amen. It is vital not only to forecasting but also to storm warnings. Yet no one teaches it anymore.

One other thought: when NMC went to the PE model, it was a big step forward (it was quite good) and it went to 72 hours. And, we made skillful forecasts from it (check the stats). In the entire USA, there were three models -- period.

There was/is no need for literally dozens of flavors of models to make three-day forecasts. In some ways, we've become model-addicts. We can't stop ourselves.

Absolutely true story: At WeatherData, I had a brand new meteorologist from Michigan. The LFM model, which was quite good, showed a stationary front near the KS-OK border and a strong LLJ overnight. There was a 70% RH surface-500mb blob right over Wichita. I forecast "thunderstorms, some severe" overnight. Took maybe 15 minutes.

Because I was training him, I let him do his own thing (it was his first week). He was buried in the models for ~2 hours. His forecast? "Clear." So, I sat him down and explained why I didn't think it was the correct forecast. He was upset and told me that my forecast was probably wrong because I hadn't looked at all of the models, which was true. He was absolutely adamant.

What happened? Tornado warning for Wichita ~4:30am. Then softball-sized hail with what at the time was the 11th most costly hailstorm in U.S. history.

We really can make this way too complicated. If something works, stay with it. If there is a shiny new thing, give it a test drive to see if it is truly a step up. But, never be afraid to stay with what works.

Jason N

EF5

Oh, this is not just you or your story. I believe this to be happening everywhere. "It", whatever "it" is, seems to have been trying to replace the person with AI for the past 8-10 years now. There seems to be some belief that we can, on the back end, "code out" all of the main parameters and remove the human so we can ultimately reduce the labor force. That seems to be a strategic view, which shapes policy and budgets down to curriculum.

I haven't seen what curriculum looks like these days, so I can't exactly speak to it or to how it manifests itself in people's problem solving, big-picture understanding, and methodology for creating a forecast. Instructors teach, and sometimes will be arbiters of the strategic vision, but I think you are experiencing what I have as well. The old-school methods of teaching patterns are being replaced by "trust the model," but we have 8 to use, with 8 different iterations of outcomes and 8 different biases, so you get stuck! It's literally "analysis paralysis," whereas old-school pattern people can define it in seconds and work out some of the details later, specifically for convective or winter weather, which is where, in my view, humans will always be in the loop.

I see the current generation of folks acting in much the same way as you described.


adlyons

EF2

Going a bit off topic, fellas. I think a separate thread (if one doesn't already exist) for model-related discussion, bias, etc. would be a valuable addition.
