IMO, this was a pretty significant forecast bust for anyone who was saying things looked scary or dangerous, or who thought a high risk was warranted. As can be seen on the second page of this thread, I was highly skeptical of this setup from a long way out, including not hopping on the hype train last Tuesday when the 12Z GFS came in and first showed a pattern that, in the past, has been associated with significant tornado outbreaks. I think anyone who is minimally biased about severe weather and chasing, and who took a serious look at the details, should have recognized (and in most cases did recognize) the high level of uncertainty and poor predictability of this event, and was therefore hesitant to hype it up or suggest that an outbreak or significant severe weather was going to occur. Events like this are also the reason I don't get excited about ANY forecast that suggests sig severe if it's still more than 3 or 4 days out.

Here are the signs I saw that this event was anything but a slam-dunk outbreak, and that it might even end up being a null event:

-Significant and persistent disagreement among various model cores (NAM, GFS, ECMWF, FIM, SREF, etc.), regardless of run-to-run consistency within any given model core. This is a HUGE red flag for predictability and certainty.

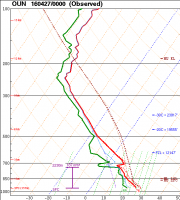

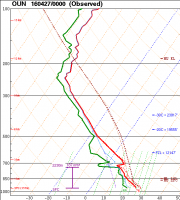

-Consistently unrealistic representation of PBL structure in the GFS (i.e., skin-deep moisture with essentially no mixed layer above it). The 00Z OUN sounding confirms the poor representation of PBL physics.

Yes, the real PBL was not perfectly mixed, and the rich moisture did drop off fairly quickly with height, but the GFS forecasts showed much steeper near-surface dewpoint lapse rates.

-Consistent overforecasting of surface moisture by the NAM. It overdid moisture on Sunday, Monday, and Tuesday. The SREF, which is typically less biased (the NAM usually sits near or above the top of the SREF distribution), also overforecast moisture. The overforecast on Sunday was quite minor, but Crook (1996) is a great reference for how much a difference of just 1 g/kg in mixing ratio matters for convective activity, and we saw what that meant south of roughly U.S. 400 on Sunday. On Monday and Tuesday, NAM forecasts of 2-m dewpoint were generally about 5 F too high.

-The "veer-back" wind profile in the low levels was persistent in many models. You cannot ignore this feature when it shows up and just assume that a trough means the wind profile will magically look great.
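To put the NAM's roughly 5 F dewpoint bias in the same units as the 1 g/kg sensitivity from Crook (1996), here is a minimal Python sketch that converts dewpoint to mixing ratio. It assumes a Magnus-type saturation vapor pressure formula (Bolton's 1980 coefficients) and an assumed surface pressure of 970 hPa; the 65 F and 70 F dewpoints are illustrative values, not observations from this event.

```python
import math


def saturation_vapor_pressure_hpa(temp_c: float) -> float:
    """Magnus-type approximation (Bolton 1980 coefficients) over liquid water, in hPa."""
    return 6.112 * math.exp(17.67 * temp_c / (temp_c + 243.5))


def mixing_ratio_g_per_kg(dewpoint_c: float, pressure_hpa: float) -> float:
    """Water vapor mixing ratio implied by a dewpoint at a given pressure, in g/kg."""
    e = saturation_vapor_pressure_hpa(dewpoint_c)  # actual vapor pressure equals e_s(Td)
    return 1000.0 * 0.622 * e / (pressure_hpa - e)


def f_to_c(temp_f: float) -> float:
    return (temp_f - 32.0) * 5.0 / 9.0


# Assumed surface pressure, roughly typical for the southern Plains; not an observed value.
SURFACE_PRESSURE_HPA = 970.0

observed = mixing_ratio_g_per_kg(f_to_c(65.0), SURFACE_PRESSURE_HPA)
forecast = mixing_ratio_g_per_kg(f_to_c(70.0), SURFACE_PRESSURE_HPA)
print(f"65 F dewpoint: {observed:.1f} g/kg")
print(f"70 F dewpoint: {forecast:.1f} g/kg")
print(f"5 F dewpoint error: {forecast - observed:.1f} g/kg")
```

Under these assumptions a 5 F dewpoint error works out to roughly 2.7 g/kg of mixing ratio, several times the 1 g/kg difference that matters for convection.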

Across the various model cores, the ingredients for a significant severe weather outbreak were there. But that is irrelevant if those ingredients do not come together in space and time. Who cares if you have 4000 J/kg of MLCAPE if it's displaced from the area with 40+ kt of 0-6 km shear? Who cares if you have THOSE two ingredients together if there is no trigger mechanism? Who cares if you have great deep-layer shear if there is no low-level shear? Sure, high CAPE can sometimes make up for weak shear in certain environments, but I get the feeling that some people use that statement to wishcast when shear appears too weak for supercells.
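The co-location point can be made concrete with a toy grid. This Python sketch uses invented MLCAPE and 0-6 km shear values (not from any model run) and counts grid points where both exceed thresholds at the same place and time. The 40 kt shear threshold matches the supercell value discussed here; the 2000 J/kg MLCAPE threshold is an arbitrary assumption for illustration.

```python
from typing import List

Grid = List[List[float]]


def colocated_mask(cape: Grid, shear_kt: Grid, cape_min: float, shear_min: float) -> List[List[bool]]:
    """True only where BOTH ingredients meet their thresholds at the same grid point."""
    return [
        [c >= cape_min and s >= shear_min for c, s in zip(cape_row, shear_row)]
        for cape_row, shear_row in zip(cape, shear_kt)
    ]


# Hypothetical 3x4 grid at one valid time (illustrative numbers only).
# High CAPE sits in the west, strong shear in the east.
mlcape = [
    [4000, 3500, 1500, 500],
    [4200, 3000, 1200, 400],
    [3800, 2500, 1000, 300],
]
shear_0_6km_kt = [
    [20, 25, 45, 55],
    [18, 28, 42, 50],
    [15, 30, 40, 48],
]

mask = colocated_mask(mlcape, shear_0_6km_kt, cape_min=2000, shear_min=40)
overlap = sum(cell for row in mask for cell in row)  # True counts as 1
print("max MLCAPE:", max(max(r) for r in mlcape), "J/kg")
print("max 0-6 km shear:", max(max(r) for r in shear_0_6km_kt), "kt")
print("grid points with both:", overlap)
```

Each ingredient looks impressive on its own (4200 J/kg and 55 kt maxima), yet no grid point has both, so the overlap count is zero. Checking overlap rather than field maxima is the whole point.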

I personally disagreed with SPC's forecasts from day 2 onward.

@Mike Marz asked me in chat what I would've done for the day 2 outlook, and I told him I would've kept an "enhanced" risk (although I probably could've been talked into a very small "moderate" in north-central KS). I think the level of uncertainty should've precluded a "moderate" risk. I was bothered that the convective outlook text mentioned a high risk, because it made me think the forecasters wanted to go with a "high" risk, which I think would've been an even bigger mistake. I also saw some AFDs from the Wichita and Norman offices calling for a tornado outbreak that I thought were too strongly worded. I very much disagreed with the issuance of a PDS tornado watch. When that watch was issued (and it was a huge watch in terms of areal coverage), it was never obvious that the ingredients for widespread significant tornadoes were present or would come together. The veer-back pattern was clearly evident in VADs from KTLX and, later on, KFDR. Coupled with dewpoints that were struggling to stay in the upper 60s and generally weren't reaching 70, and the lack of a well-focused dryline south of I-40 (and uncertainty about the identity of the boundary north of that), I think a watch of that magnitude was a mistake. I told my chase partners yesterday I would've gone with something more like 70-40, and even that was a huge overforecast.
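A veer-back signature like the one in those VADs can be checked mechanically. The Python sketch below is one simple way to do it, not an operational algorithm: it flags a layer that veers (turns clockwise with height) followed higher up by a layer that backs (turns counterclockwise), below an assumed 3 km top and with an assumed 10° minimum turn. It also computes 0-6 km bulk shear. The profile is hypothetical (not the actual KTLX or KFDR data), built so that it clears the 40 kt bulk-shear threshold while still containing the veer-back kink.

```python
import math
from typing import List, Tuple

# (height AGL in km, direction the wind blows FROM in degrees, speed in kt)
Level = Tuple[float, float, float]


def signed_turn_deg(dir_low: float, dir_high: float) -> float:
    """Turning between two levels: positive = veering (clockwise), negative = backing."""
    return (dir_high - dir_low + 180.0) % 360.0 - 180.0


def has_veer_back(profile: List[Level], top_km: float = 3.0, min_turn_deg: float = 10.0) -> bool:
    """True if a veering layer is followed higher up by a backing layer, all below top_km."""
    seen_veer = False
    for (_, d0, _), (z1, d1, _) in zip(profile, profile[1:]):
        if z1 > top_km:
            break
        turn = signed_turn_deg(d0, d1)
        if turn >= min_turn_deg:
            seen_veer = True
        elif turn <= -min_turn_deg and seen_veer:
            return True
    return False


def wind_components(direction_deg: float, speed_kt: float) -> Tuple[float, float]:
    """(u, v) in kt using the meteorological convention (direction = where wind comes FROM)."""
    rad = math.radians(direction_deg)
    return -speed_kt * math.sin(rad), -speed_kt * math.cos(rad)


def bulk_shear_kt(profile: List[Level], bottom_km: float, top_km: float) -> float:
    """Magnitude of the vector wind difference between two heights present in the profile."""
    by_height = {z: (d, s) for z, d, s in profile}
    u0, v0 = wind_components(*by_height[bottom_km])
    u1, v1 = wind_components(*by_height[top_km])
    return math.hypot(u1 - u0, v1 - v0)


# Hypothetical S-shaped profile (illustrative only).
profile = [
    (0.0, 160, 15),
    (0.5, 190, 30),
    (1.0, 215, 35),
    (2.0, 200, 30),  # backs 15 degrees between 1 and 2 km
    (3.0, 220, 35),
    (6.0, 240, 60),
]
print("veer-back in lowest 3 km:", has_veer_back(profile))
print(f"0-6 km bulk shear: {bulk_shear_kt(profile, 0.0, 6.0):.0f} kt")
```

With these made-up numbers the profile has roughly 59 kt of 0-6 km bulk shear, comfortably above 40 kt, yet it still contains the veer-back kink that a bulk-shear number alone never reveals.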

A few summary points:

-'S'-shaped hodographs (i.e., those with veer-back in the lowest few kilometers) suck because they mess with how the updraft tilts the horizontal vorticity associated with the environmental wind shear into the vertical to form the mesocyclone that defines a supercell. Storm modes are typically messy when S-shaped hodographs are present, even if 0-6 km shear exceeds the typical threshold for supercells (40 kt). At the same time, an S-shaped hodograph does not mean tornadoes can't or won't occur. If there is enough low-level shear, you can still get tornadoes with such hodographs; it's just harder for the atmosphere to produce them.

-Never assume that things will come together just because the large-scale pattern is broadly analogous to a past case that was associated with a severe weather outbreak or significant severe weather.

-Details always matter. Sometimes the smallest detail can make the difference between an outbreak and a non-event.

-Neither a single model run nor two or three consecutive runs can confirm or rule out that an event will happen.

-Don't get hung up on one model core. It's 2016, and the meteorological community, both worldwide and in the US, has advanced dramatically since the "old days" of the '80s, '90s, and even the 2000s. We have access to more information from more meteorological centers around the world than ever before, and each of them runs legitimate NWP forecast systems that can provide useful and important information. For example, don't ignore the GFS just because you don't like what it did "that one time" or because it gets one particular aspect of the forecast wrong. That doesn't mean every other aspect of its forecast is also wrong, or that every future forecast it makes will be wrong.

-This last point is part of the one above, but it is so important that I had to give it its own dash: take advantage of ensembles. Ensembles are the way of the future (actually, they've been the best way of doing things since operational ensemble forecasting began in the early 1990s, and they've improved rapidly at the mesoscale and storm scale over the last 10 years), and everyone on this forum would do themselves a lot of good to learn how to use them, and to use them consistently when looking at upcoming weather events, whether severe convective storms, tropical cyclones, winter storms, or anything else. Ensembles offer a dimension of information that deterministic models can't, because they estimate uncertainty and show how different the future atmospheric state could be if some part of the forecast process turns out to be wrong (and forecast error is always present, arising from different parts of the forecast process).
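As a rough illustration of what an ensemble adds over a single deterministic run, here is a minimal Python sketch. The 10 member dewpoint forecasts, the single deterministic value, and the 68 F threshold are all invented for illustration; the point is that the ensemble yields a mean, a spread, and an exceedance probability, and shows where one deterministic run sits within the distribution (much like the NAM sitting near the top of the SREF).

```python
from statistics import mean, pstdev
from typing import List


def exceedance_probability(members: List[float], threshold: float) -> float:
    """Fraction of ensemble members at or above a threshold."""
    return sum(m >= threshold for m in members) / len(members)


def percentile_rank(members: List[float], value: float) -> float:
    """Fraction of ensemble members strictly below a given (e.g. deterministic) value."""
    return sum(m < value for m in members) / len(members)


# Hypothetical 10-member ensemble of 2-m dewpoint forecasts (F) at one point and time,
# plus one hypothetical deterministic run; all numbers are invented for illustration.
ensemble_td_f = [62, 64, 65, 66, 66, 67, 68, 69, 70, 72]
deterministic_td_f = 71

print(f"ensemble mean: {mean(ensemble_td_f):.1f} F")
print(f"ensemble spread (std dev): {pstdev(ensemble_td_f):.1f} F")
print(f"P(Td >= 68 F): {exceedance_probability(ensemble_td_f, 68):.0%}")
print(f"deterministic run sits above {percentile_rank(ensemble_td_f, deterministic_td_f):.0%} of members")
```

With these made-up numbers, the deterministic run's 71 F sits above 90% of the members, while the ensemble gives only a 40% chance of reaching 68 F. That is the kind of context a single run, or two or three consecutive runs of one model, can't provide.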