gdlewen


I'm opening this event thread to discuss the supercell that formed yesterday, July 17, 2023, northeast of DDC. This could be part of the discussion following @Mike Smith's post on NWS-DDC ("Dodge City NWS and the Astonishing Decision Not to Issue a Tornado Warning Earlier today"), but I want to focus on the process of anticipating convective initiation.

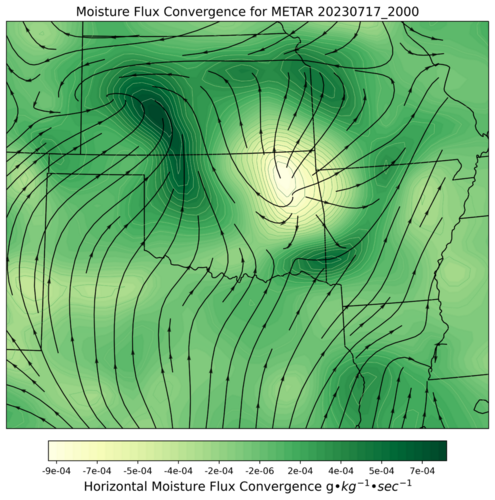

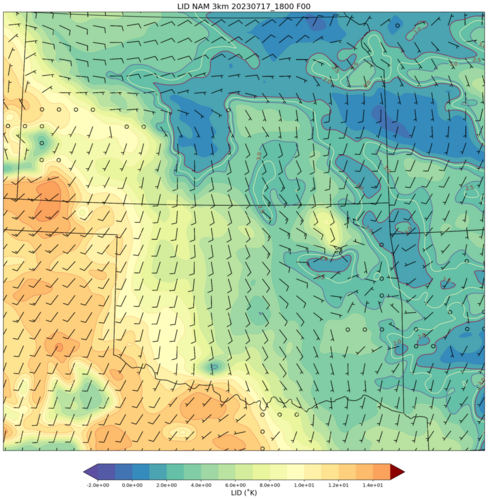

I am hoping to gain some insight into the relative "orders of magnitude" of the factors relevant to convective initiation in situations like yesterday afternoon's. There were no "non-synoptic soundings" in the area, and the NAM 3km showed weak capping. A weak cold front was draped across central KS just south of I-70, but the air-mass contrast across the boundary was fairly low.

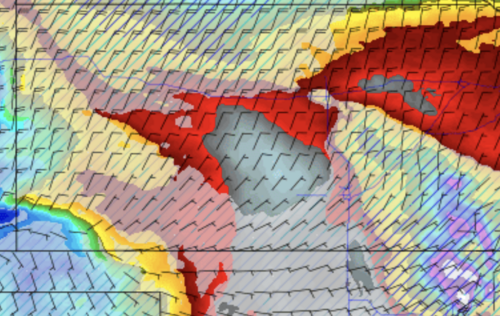

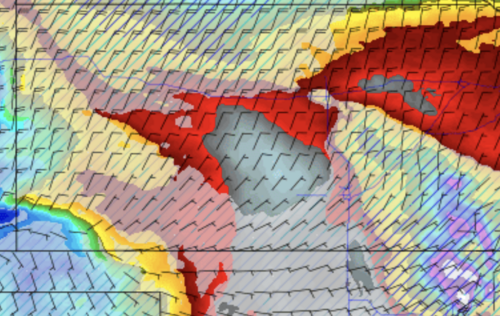

Convective temperatures were at or below the observed temperatures in the region, and MLCAPE values were on the order of 4000-5000 J/kg. At the same time, MLCIN was weak, on the order of 0 to -5 J/kg. Here's a screenshot of the CoD NAM 3km MLCAPE/MLCIN for 20Z yesterday:

The NAM 3km did predict discrete supercell formation in the area between 20Z and 21Z. The area was on the western margin of the SPC SLGT risk polygon.
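
For a rough feel for the "orders of magnitude" involved, simple parcel theory turns these energies into vertical speeds: a parcel needs an upward speed of about sqrt(2|CIN|) to coast through the inhibition, and sqrt(2·CAPE) is the theoretical updraft ceiling once it is free. Here is a minimal Python sketch, assuming the NAM 3km values quoted above as inputs; the helper name is my own (hypothetical), and the approach ignores entrainment, condensate loading, and pressure perturbations, so real updrafts would be well below the upper bound:

```python
import math


def parcel_speed_from_energy(energy_j_per_kg: float) -> float:
    """Hypothetical helper: speed (m/s) whose kinetic energy per unit mass
    equals |energy|, i.e. w = sqrt(2 * |E|). Pure parcel theory, no
    entrainment, water loading, or pressure effects."""
    return math.sqrt(2.0 * abs(energy_j_per_kg))


# Values quoted from the NAM 3km for ~20Z; treat them as rough inputs.
mlcape_range = (4000.0, 5000.0)  # J/kg
mlcin_range = (0.0, -5.0)        # J/kg

for cape in mlcape_range:
    w_max = parcel_speed_from_energy(cape)
    print(f"MLCAPE {cape:.0f} J/kg -> parcel-theory w_max ~ {w_max:.0f} m/s")

for cin in mlcin_range:
    w_needed = parcel_speed_from_energy(cin)
    print(f"MLCIN {cin:.0f} J/kg -> forced ascent needed ~ {w_needed:.1f} m/s")
```

With these inputs the parcel-theory ceiling comes out around 89-100 m/s, while only about 3 m/s of forced ascent would be needed to get through 5 J/kg of MLCIN. That asymmetry suggests that, in an environment like this, even a modest lifting mechanism such as a boundary collision could be enough.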

At about 3:15 PM CDT (2015Z), the collision of two boundaries initiated convection, which quickly became severe:

Attachment: KDDC_20230717_2114.mov

From reading @Mike Smith's blog post (cf. the link above), it seems he predicted the threat, but it wasn't until MCD #1601, issued nearly 30 minutes later, that SPC acknowledged it. The SPC forecasters wrote, "The cap has been breached across south-central KS, with explosive development recently noted with a supercell...", and then went on to lay out all the factors that would make any convection that managed to develop potentially severe.

Finally, my question: given the weak capping, and conditions favoring severe convection in the area should it develop, what goes into the decision to issue an MCD or a watch? In other words, what information did the forecasters at DDC or SPC have that kept them from issuing any guidance until after the fact? This is not a complaint, nor an attempt to malign anyone, but rather an attempt to reason from incomplete information. It's pretty obvious they have access to far more information than the general public does... What subtle factors were at play here?