I don't know who is behind what I presume is a research experiment in forecasting tornado outlooks (compared with SPC's), but a Twitter account called Nadocast showed up at least a few months ago. Throughout the summer it has posted 00Z and 14Z probabilistic areal tornado outlooks daily, and it has been open about posting historical forecasts and verification results.

From what I can gather, the makers of Nadocast are producing calibrated gridded tornado probabilities from HREF output. They are likely using some combination of recent performance and AI to adjust the weights of individual members, and they are trying a variety of composite parameters (e.g., SCP, STP) to home in on better forecasts.
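As far as I can tell the exact method isn't public, so the following Python sketch is only my guess at the general shape of such a pipeline. It weights each HREF member by the inverse of its recent Brier score, blends the members' gridded probabilities, and then maps the blend through a reliability table so that a forecast of, say, 5% verifies about 5% of the time. The member names, Brier scores, bin edges, and observed frequencies are all hypothetical. Inverse-Brier weighting is a stand-in I chose for illustration, not something Nadocast has described.

```python
import bisect
from typing import Dict, List, Sequence


def inverse_brier_weights(recent_brier: Dict[str, float]) -> Dict[str, float]:
    """Weight each member by 1 / (recent Brier score), normalized to sum to 1.

    A lower Brier score means better recent performance, so that member
    gets a larger share of the blend. (Hypothetical weighting scheme.)
    """
    inverse = {m: 1.0 / max(score, 1e-6) for m, score in recent_brier.items()}
    total = sum(inverse.values())
    return {m: w / total for m, w in inverse.items()}


def blend(member_probs: Dict[str, List[float]], weights: Dict[str, float]) -> List[float]:
    """Weighted mean of the members' tornado probabilities at each grid point."""
    n_points = len(next(iter(member_probs.values())))
    return [
        sum(weights[m] * probs[i] for m, probs in member_probs.items())
        for i in range(n_points)
    ]


def calibrate(raw: Sequence[float], bin_edges: Sequence[float],
              observed_freq: Sequence[float]) -> List[float]:
    """Replace each raw probability with the historical tornado frequency of its bin.

    bin_edges must be sorted and have len(observed_freq) + 1 entries;
    values at or above the last edge fall into the top bin.
    """
    last_bin = len(observed_freq) - 1
    calibrated = []
    for p in raw:
        k = bisect.bisect_right(bin_edges, p) - 1
        k = min(max(k, 0), last_bin)  # clamp to a valid bin index
        calibrated.append(observed_freq[k])
    return calibrated


# Hypothetical example: two members, three grid points.
weights = inverse_brier_weights({"member_a": 0.02, "member_b": 0.04})
raw = blend({"member_a": [0.0, 0.06, 0.12], "member_b": [0.03, 0.0, 0.30]}, weights)
final = calibrate(raw, bin_edges=[0.0, 0.02, 0.05, 0.10, 1.0],
                  observed_freq=[0.005, 0.03, 0.07, 0.15])
print(final)  # [0.005, 0.03, 0.15]
```

Calibrating after blending, rather than calibrating each member separately, is one reasonable design choice: the reliability table then corrects the biases of the combined product directly.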

Some of their forecasts are pretty impressive compared to operational products. Let's look at a few:

Image comparison format (per case): SPC 06Z Day 1 outlook (left), 00Z Nadocast run for the next day (right)

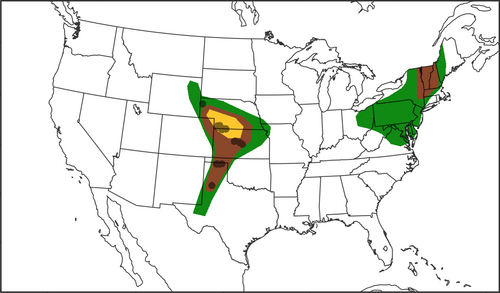

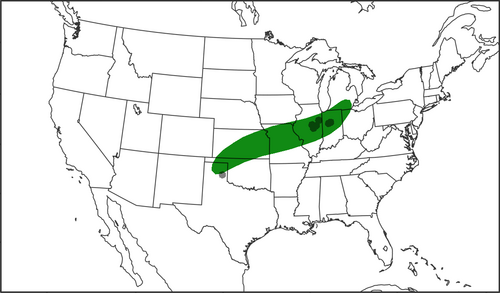

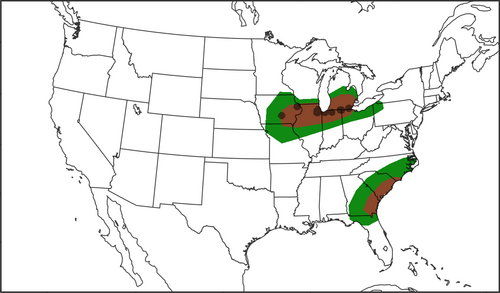

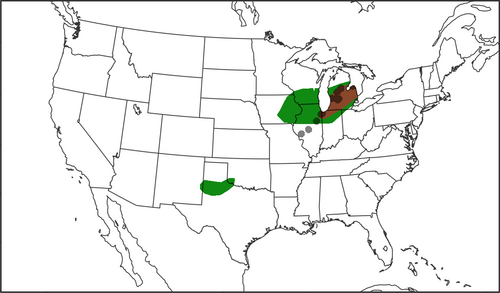

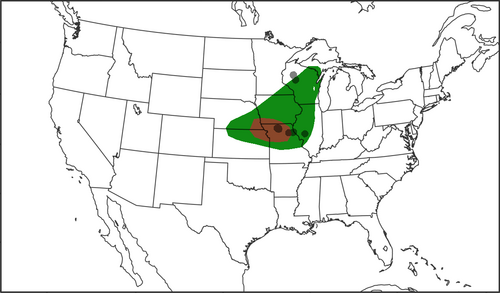

June 26th 2021

Nadocast better captured the SW-NE orientation of the line of tornado reports across IL and reduced the false-alarm area in IA.

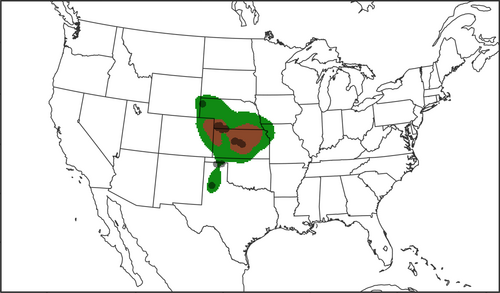

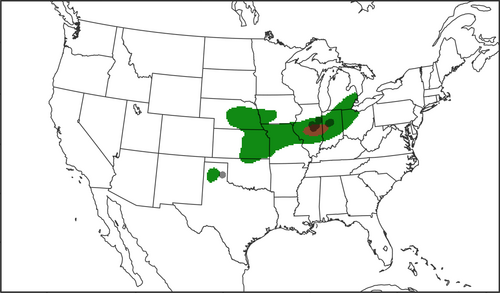

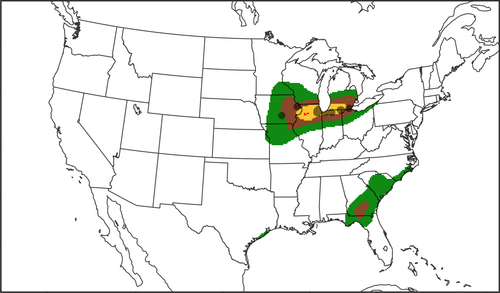

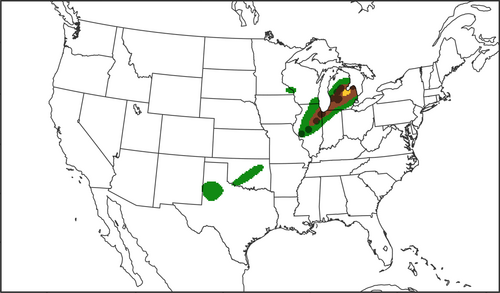

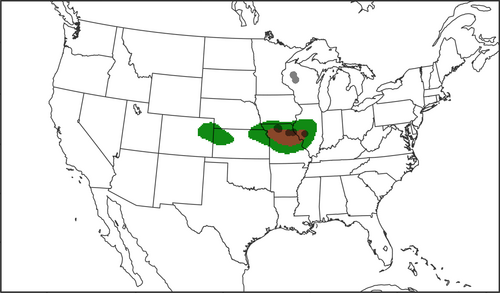

June 25th 2021

Nadocast correctly placed a 5% area where a cluster of tornado reports occurred in IL/IN and trimmed some false-alarm area over the central Plains without missing the tornado report east of Amarillo.

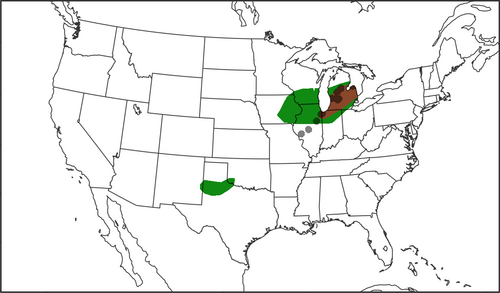

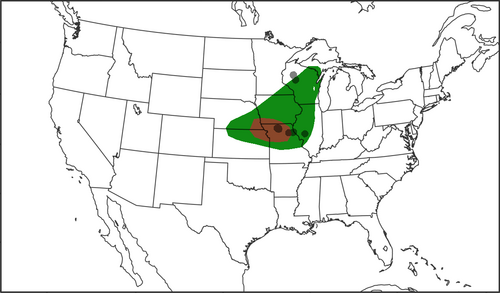

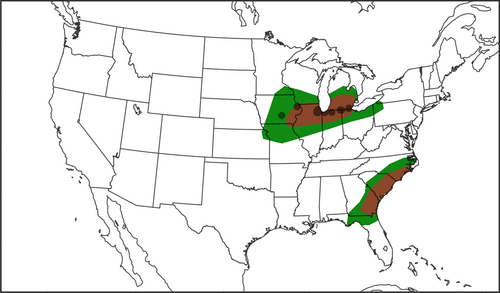

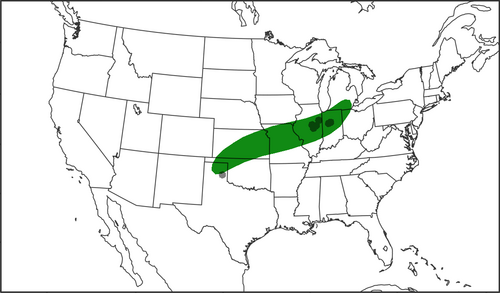

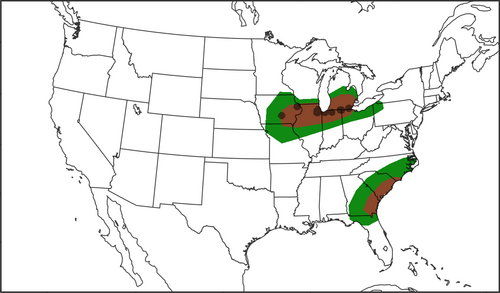

June 24th 2021

Nadocast was slightly better in its placement of the 5% area and trimmed false-alarm area in IA/MN/WI (although it added a small 2% area to the west that didn't verify).

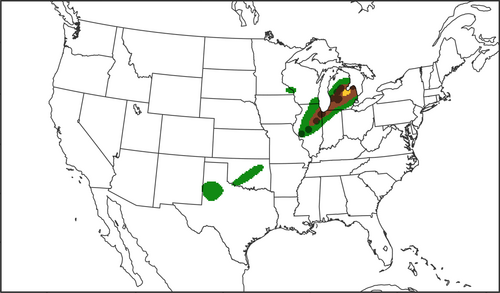

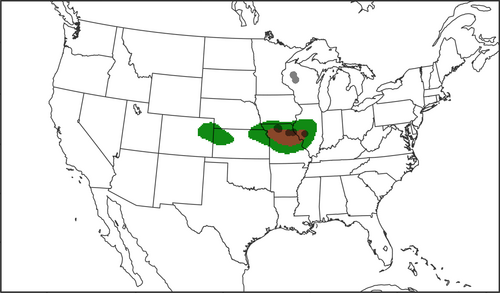

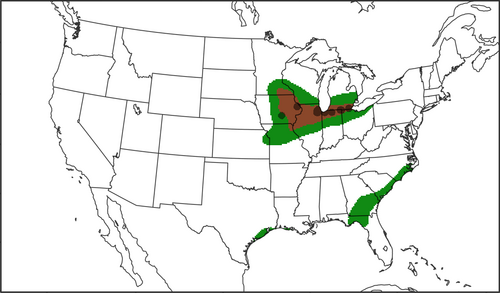

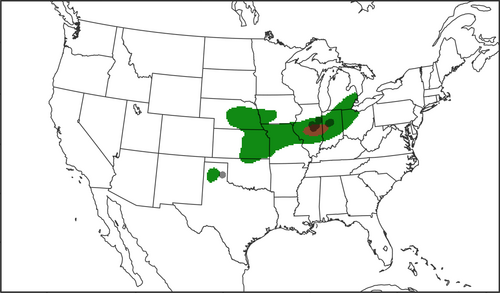

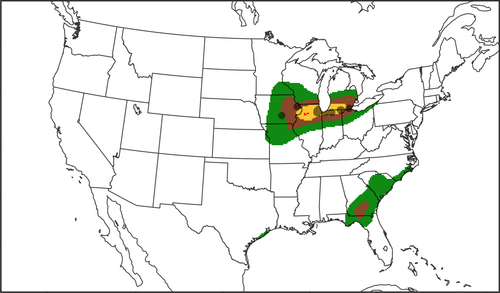

June 20th 2021

It's a subjective call whether Nadocast improved on SPC in the Midwest by painting higher probabilities along the axis where tornado reports occurred, but it was definitely an improvement in cutting down probabilities in the southeastern US (GA/SC/NC), where no tornado reports occurred.

In the morning update, though, the discrepancy in the SE US became more prominent:

Whereas the SPC outlook areas grew, Nadocast's shrank, giving Nadocast a lower false alarm ratio (FAR).
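FAR is the fraction of the forecast "yes" area where no tornado was reported. As a toy illustration in Python, the sketch below counts hits, false alarms, and misses for grid cells at or above a probability threshold, then computes FAR and the probability of detection (POD). The grids are made up, and real outlook verification uses a neighborhood around each report rather than exact grid cells, so treat this as a simplified model. It shows why shrinking the forecast area lowers FAR without hurting POD, as long as no reports fall outside the smaller area.

```python
from typing import List, Tuple

Grid = List[List[float]]


def contingency(forecast: Grid, observed: List[List[int]], threshold: float) -> Tuple[int, int, int]:
    """Count hits, false alarms, and misses for cells at or above `threshold`.

    `observed` holds 1 where a tornado report fell in a cell, else 0.
    """
    hits = false_alarms = misses = 0
    for f_row, o_row in zip(forecast, observed):
        for prob, report in zip(f_row, o_row):
            forecast_yes = prob >= threshold
            if forecast_yes and report:
                hits += 1
            elif forecast_yes:
                false_alarms += 1
            elif report:
                misses += 1
    return hits, false_alarms, misses


def far(hits: int, false_alarms: int) -> float:
    """False alarm ratio: share of forecast-yes cells with no report."""
    return false_alarms / (hits + false_alarms) if hits + false_alarms else 0.0


def pod(hits: int, misses: int) -> float:
    """Probability of detection: share of report cells inside the forecast area."""
    return hits / (hits + misses) if hits + misses else 0.0


# Made-up 3x4 grids: reports in two cells, a broad outlook and a tighter one.
observed = [[0, 0, 0, 0],
            [0, 1, 1, 0],
            [0, 0, 0, 0]]
broad = [[0.02, 0.05, 0.05, 0.02],
         [0.02, 0.05, 0.05, 0.05],
         [0.02, 0.05, 0.05, 0.02]]
tight = [[0.00, 0.02, 0.02, 0.00],
         [0.00, 0.05, 0.05, 0.02],
         [0.00, 0.00, 0.00, 0.00]]

for name, grid in (("broad", broad), ("tight", tight)):
    h, fa, m = contingency(grid, observed, threshold=0.02)
    print(name, round(far(h, fa), 2), pod(h, m))
# broad 0.83 1.0
# tight 0.6 1.0
```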

Many more examples can be found in their archive. It's admittedly difficult to follow, but this seems to be a small operation, so we're probably lucky to be seeing anything at all: Nadocast compared to SPC

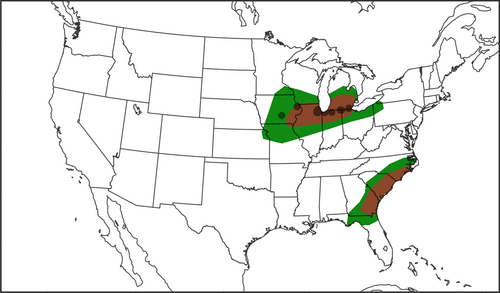

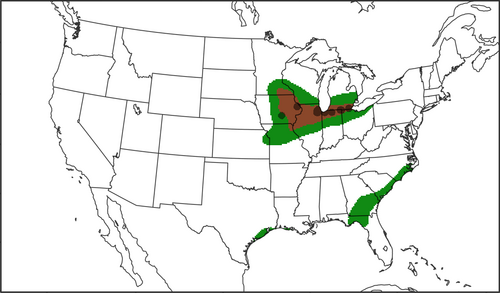
