While my app is not the one Rob was referring to, I did release version 3.4 of "wX" ( Android only, via a post in this forum ) last fall with level 2 support for ref/vel at the lowest tilt. The entire L2 data file is downloaded to the device, and performance is better than I expected, though numerous performance tweaks are still being made. I'll post some details in the next few days along with some examples. To answer one of the questions above: L2 has 720 radials vs 360 for L3 base ref, and over 1800 bins per radial vs, I think, 460. That is basically 8 times the total number of bins ( 720 × 1800 ≈ 1.3 million vs 360 × 460 ≈ 166 thousand ).

Josh

First, as far as I know, the only place where a member of the general public can actually obtain level 2 data is the Iowa Mesonet website. The NWS does not appear to provide such data to the general public. If someone knows otherwise, please let me know, as it would be nice to have a backup in place.

With regard to performance, the first item is not one you would normally expect, but it makes a tangible difference. The files are stored in directories that contain many files, so just fetching the directory listing takes multiple seconds. Instead, fetch the marker file in each directory and parse out the last entry. Additionally, check the size of that file to make sure it’s not a transfer still in progress.
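A minimal sketch of the marker-file idea in Java. The listing format ( one `size filename` pair per line ), the `MIN_COMPLETE_BYTES` threshold, and the class and method names are all assumptions for illustration, not the actual server layout or what wX does. It takes the newest entry and falls back to the previous one if the newest looks too small to be a finished upload.

```java
import java.util.ArrayList;
import java.util.List;

public class LatestVolume {
    // Hypothetical threshold: anything smaller is treated as still uploading.
    static final long MIN_COMPLETE_BYTES = 1_000_000L;

    /** Returns the newest file name whose size passes the threshold, or null if none does. */
    static String pickLatest(String listing) {
        // Assumed format: "<size in bytes> <file name>" per line, oldest first.
        List<String[]> entries = new ArrayList<>();
        for (String line : listing.split("\\r?\\n")) {
            String[] parts = line.trim().split("\\s+");
            if (parts.length == 2) {
                entries.add(parts);
            }
        }
        // Walk backwards from the last entry until one looks complete.
        for (int i = entries.size() - 1; i >= 0; i--) {
            long size;
            try {
                size = Long.parseLong(entries.get(i)[0]);
            } catch (NumberFormatException e) {
                continue; // skip lines that do not start with a number
            }
            if (size >= MIN_COMPLETE_BYTES) {
                return entries.get(i)[1];
            }
        }
        return null;
    }

    public static void main(String[] args) {
        // Made-up listing: the last file is tiny, so it is assumed to be in transfer.
        String listing = "4200000 volume_A\n3900000 volume_B\n1200 volume_C\n";
        System.out.println(pickLatest(listing));
    }
}
```

Only the small marker file is fetched and parsed here, so the slow directory listing is never requested.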

The Android-specific optimizations ( which could generally be used on other platforms too ) are as follows. The level 2 dataset is enormous, and before I applied the optimizations below it would take 1-3 minutes to render. Now it’s anywhere from 3-10 seconds. One item in particular that I didn’t expect to help much ended up cutting the total render time in half ( I don’t recall which one it was ).

- Use OpenGL instead of the native canvas

- Use C code via JNI as much as possible even for color map translations

- Use preallocated ByteBuffers in Java but then populate them in C via JNI ( directly allocated ByteBuffers avoid Java garbage collection and object creation, both of which are expensive in Java )

- Use OpenGL functions that then use these ByteBuffers.

- Reuse the same data structures throughout the life of the radar activity ( effectively some very large chunks of memory are being allocated outside the scope of Java )

- For bin level and color, use 8-bit data values as much as possible to reduce memory consumption. I can’t recall the exact amount, but even with 8-bit values you could use as much as 40-50MB of memory to store the entire scene. Java doesn’t have an unsigned 8-bit type ( its byte is signed, -128 to 127 ), so values above 127 need some extra handling when read on the Java side

- The L2 data blob itself is made up of a number of compressed chunks. Via trial and error I found that the lowest-tilt reflectivity/velocity data is pretty much at the beginning, so you can download the entire blob but only decompress ( the chunks are bzip2 compressed ) the first 8-10 chunks ( or something like that ). This gives a substantial performance increase.

- Implement the decompression directly in C via JNI
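To illustrate the preallocated ByteBuffer and 8-bit points from the list above, here is a rough Java-only sketch. The class name, the method names and the round `BINS = 1800` figure are assumptions for illustration. In the real app the buffer would be filled from C via JNI; `putRadial` below is just a Java stand-in for that step.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

public class RadarBins {
    static final int RADIALS = 720;
    static final int BINS = 1800; // assumed round figure; the real count is "over 1800"

    // Allocated once as a direct buffer and reused for every frame,
    // so no new arrays are created per render.
    private final ByteBuffer bins =
            ByteBuffer.allocateDirect(RADIALS * BINS).order(ByteOrder.nativeOrder());

    /** Stand-in for the JNI fill: copies one radial of raw 8-bit values into the shared buffer. */
    void putRadial(int radial, byte[] raw) {
        bins.position(radial * BINS);
        bins.put(raw, 0, Math.min(raw.length, BINS));
    }

    /** Reads one bin as 0..255; Java's byte is signed, so mask with 0xFF. */
    int level(int radial, int bin) {
        return bins.get(radial * BINS + bin) & 0xFF;
    }

    int capacity() {
        return bins.capacity();
    }

    public static void main(String[] args) {
        RadarBins scene = new RadarBins();
        byte[] raw = new byte[BINS];
        raw[0] = (byte) 200; // stored as -56 in a signed Java byte
        scene.putRadial(0, raw);
        System.out.println(scene.level(0, 0)); // prints 200
    }
}
```

One byte per bin keeps this particular buffer at about 1.3 MB ( 720 × 1800 bytes ), and the `& 0xFF` mask is the extra consideration needed wherever Java code reads the values back.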

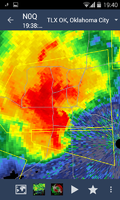

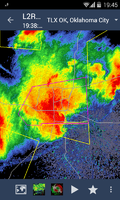

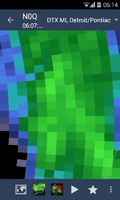

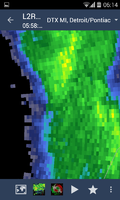

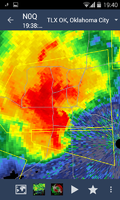

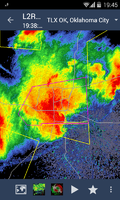

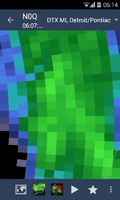

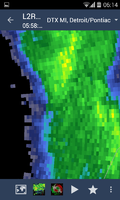

The attached images show N0Q vs L2 reflectivity as rendered by my app: one zoomed in very close, and the other an example of a well-known storm from the past week. While L2 is not the default display, it’s nice to have it as an option. FYI - the resolution of these screenshots is quite low because the phone was introduced in late 2011.