Tim Vasquez

EF5

- Joined: Dec 4, 2003
- Messages: 3,411

I noticed that nearly all of the images in the "best radar images" thread we have going use smoothed radar displays. Being from the old school, I've always been skeptical of smoothing algorithms, since anti-aliasing is always a mathematical approximation of the actual data.

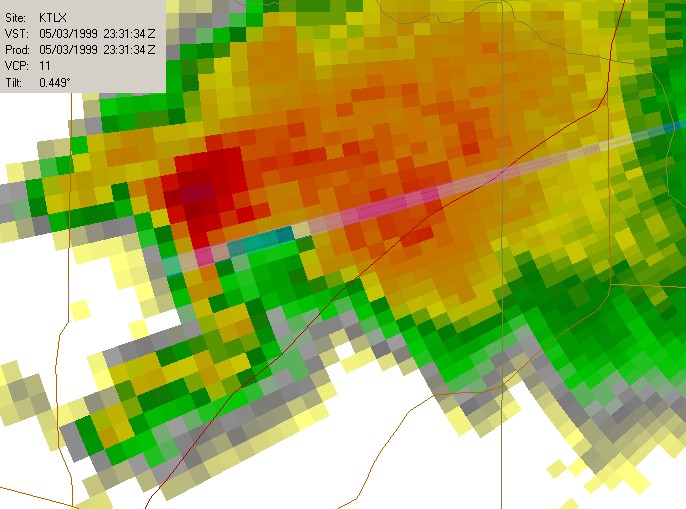

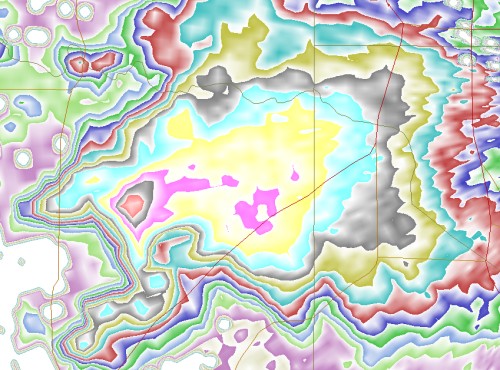

I decided to put the smoothing in GrLevelX to the test, one-dimensionally, using a single radial from this scan from 5/3/99:

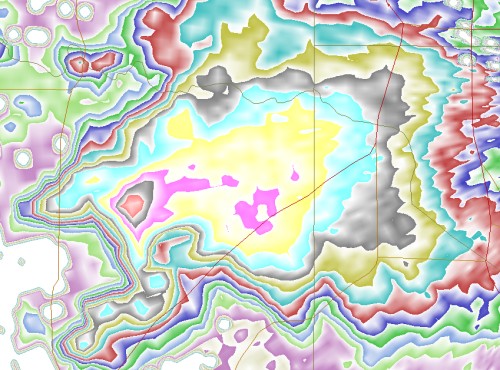

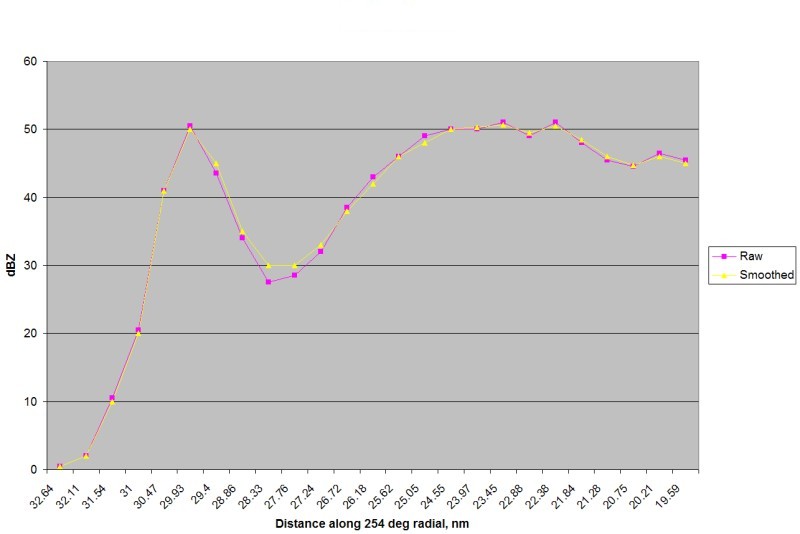

I used a special palette for the smoothed data that allowed me to quickly extract the indicated values.

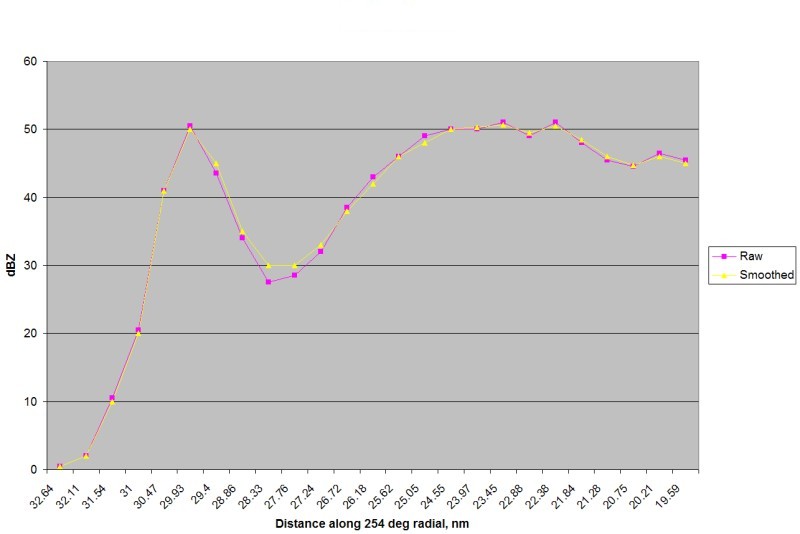

Graphed out, here's how they correlate.

Actually, the smoothed values are remarkably close to the actual data. I am not sure exactly what algorithm GrLevelX is using (possibly a built-in anti-aliasing function from the DirectX package), but it appears that the loss of actual data is negligible. The loss of correlation at 27 nm above is due to a sharp gradient orthogonal to the radial, so I don't think it's significant.
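For anyone who wants to try the same kind of one-dimensional check, here is a minimal Python sketch. It is not what GrLevelX does: the `smooth` function is a simple 3-point weighted average I'm assuming as a stand-in, and the `raw` values are made-up reflectivities, not the 5/3/99 radial.

```python
# Hypothetical reflectivity (dBZ) along one radial, one value per range gate.
# These numbers are invented for illustration; they are NOT the 5/3/99 data.
raw = [18.0, 22.5, 31.0, 44.5, 52.0, 57.5, 49.0, 38.5, 27.0, 21.5]


def smooth(values):
    """Stand-in smoother: 3-point weighted average (1/4, 1/2, 1/4).

    Assumption only; the real GrLevelX algorithm is unknown.
    The first and last gates are left unchanged.
    """
    out = list(values)
    for i in range(1, len(values) - 1):
        out[i] = 0.25 * values[i - 1] + 0.5 * values[i] + 0.25 * values[i + 1]
    return out


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


smoothed = smooth(raw)
diffs = [abs(a - b) for a, b in zip(raw, smoothed)]
worst_gate = max(range(len(raw)), key=lambda i: diffs[i])
r = pearson(raw, smoothed)

print(f"correlation r = {r:.4f}")
print(f"largest difference: {diffs[worst_gate]:.2f} dBZ at gate {worst_gate}")
```

With a smoother like this, the largest difference tends to land on the sharpest peak or gradient, which is the same kind of place the real comparison diverged.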

I realize we have a pro-smoothing crowd here but I thought I'd stir up some discussion on this topic.

My stomach still sours when I see smoothing of velocity data, as point readouts of velocities can be critical, and those get lost in smoothing. You can't see gate-to-gate shear when you can't see the gates.
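To put a rough number on the velocity concern, here is a small Python sketch. The velocities are hypothetical values across adjacent gates, and the 3-point weighted average is again only an assumed stand-in for whatever a display's smoothing actually does.

```python
# Hypothetical radial velocities across six adjacent gates, with a tight
# couplet in the middle. Invented values, not from any real scan.
vel = [-5.0, -8.0, -30.0, 32.0, 10.0, 6.0]


def smooth(values):
    """Assumed stand-in smoother: 3-point weighted average (1/4, 1/2, 1/4).

    End gates are left unchanged. Not the actual GrLevelX algorithm.
    """
    out = list(values)
    for i in range(1, len(values) - 1):
        out[i] = 0.25 * values[i - 1] + 0.5 * values[i] + 0.25 * values[i + 1]
    return out


def max_gate_to_gate(values):
    """Largest absolute velocity difference between neighboring gates."""
    return max(abs(values[i + 1] - values[i]) for i in range(len(values) - 1))


raw_shear = max_gate_to_gate(vel)
smoothed_shear = max_gate_to_gate(smooth(vel))

print(f"raw gate-to-gate difference:      {raw_shear:.1f}")
print(f"smoothed gate-to-gate difference: {smoothed_shear:.1f}")
```

Even this mild kernel cuts the apparent gate-to-gate difference sharply, because the two extreme gates get averaged with each other.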

Tim
